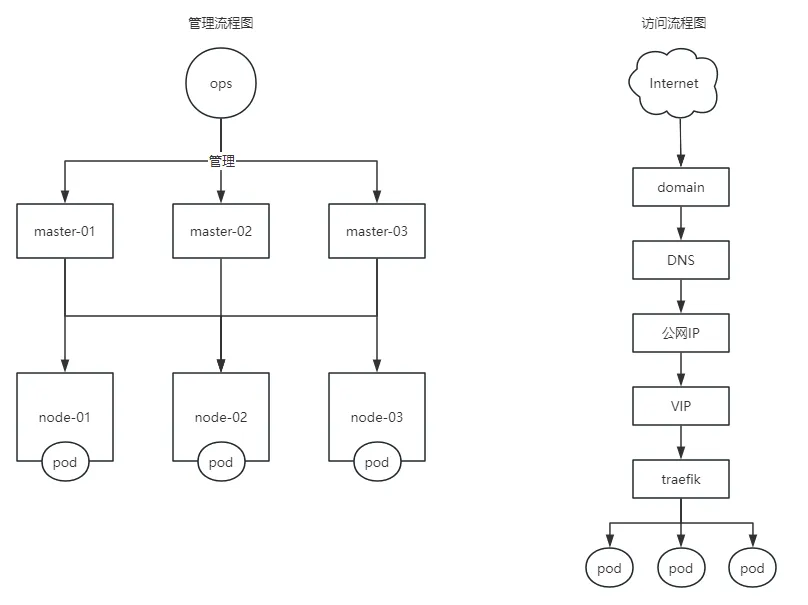

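Preface

This article deploys a Kubernetes cluster with kubeadm and an external etcd cluster, a layout suited to cases that need higher etcd performance or want etcd managed separately.

Replace the IP addresses in this article with your own. Variables are deliberately avoided to prevent confusion. If an internal DNS resolves the hosts correctly, the configured hostnames can be used instead.

Architecture diagram

Environment

System environment

Allocate resources according to your workload; the values below are a test configuration.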

| Hostname | IP | VIP | System | CPU (cores) | Memory (GB) |
| --- | --- | --- | --- | --- | --- |
| k8s-master-01 | 172.16.209.124 | 172.16.209.190 | CentOS 7.9.2009 | 8 | 8 |
| k8s-master-02 | 172.16.209.125 | 172.16.209.190 | CentOS 7.9.2009 | 8 | 8 |
| k8s-master-03 | 172.16.209.127 | 172.16.209.190 | CentOS 7.9.2009 | 8 | 8 |
| k8s-node-01 | 172.16.209.181 | - | CentOS 7.9.2009 | 16 | 16 |
| k8s-node-02 | 172.16.209.182 | - | CentOS 7.9.2009 | 16 | 16 |
| k8s-node-03 | 172.16.209.183 | - | CentOS 7.9.2009 | 16 | 16 |
| k8s-etcd-01 | 172.16.209.184 | - | CentOS 7.9.2009 | 8 | 8 |
| k8s-etcd-02 | 172.16.209.185 | - | CentOS 7.9.2009 | 8 | 8 |
| k8s-etcd-03 | 172.16.209.187 | - | CentOS 7.9.2009 | 8 | 8 |

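Software environment

| Hostname | kubeadm | kubelet | kubectl | docker | cri-dockerd |
| --- | --- | --- | --- | --- | --- |
| k8s-master-01 | v1.28.2 | v1.28.2 | v1.28.2 | 19.03.9 | 0.3.7 |
| k8s-master-02 | v1.28.2 | v1.28.2 | v1.28.2 | 19.03.9 | 0.3.7 |
| k8s-master-03 | v1.28.2 | v1.28.2 | v1.28.2 | 19.03.9 | 0.3.7 |
| k8s-node-01 | v1.28.2 | v1.28.2 | - | 19.03.9 | 0.3.7 |
| k8s-node-02 | v1.28.2 | v1.28.2 | - | 19.03.9 | 0.3.7 |
| k8s-node-03 | v1.28.2 | v1.28.2 | - | 19.03.9 | 0.3.7 |
| k8s-etcd-01 | v1.28.2 | v1.28.2 | - | 19.03.9 | 0.3.7 |
| k8s-etcd-02 | v1.28.2 | v1.28.2 | - | 19.03.9 | 0.3.7 |
| k8s-etcd-03 | v1.28.2 | v1.28.2 | - | 19.03.9 | 0.3.7 |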

Initialize the system environment

Run these steps on every node.

Disable the firewall

systemctl disable firewalld && systemctl stop firewalld

Disable SELinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

setenforce 0

Disable swap

sed -i "s/^[^#]*swap*/#&/g" /etc/fstab

swapoff -a

free -m
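The `sed` expression above comments out any active line that contains the text `swa`, which is broader than strictly needed. As a minimal sketch, assuming the usual fstab layout in which the third field is the filesystem type, the same step can be written so that it only touches real swap entries and is safe to run twice. The function name `comment_swap_entries` is hypothetical and not part of any tool.

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Assumption: field 3 of each entry is the filesystem type.
comment_swap_entries() {
    file=$1
    tmp=$(mktemp) || return 1
    if awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$file" > "$tmp"; then
        # Write back through the original file so ownership and mode are kept.
        cat "$tmp" > "$file"
        rc=$?
    else
        rc=1
    fi
    rm -f "$tmp"
    return $rc
}

# Usage on a real node (as root), then turn swap off for the running system:
#   comment_swap_entries /etc/fstab && swapoff -a
```

Lines that are already commented are skipped, so repeated runs do not stack `#` characters.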

Kernel configuration

Kernel parameter file: /etc/sysctl.conf

net.ipv4.tcp_keepalive_time=600

net.ipv4.tcp_keepalive_intvl=30

net.ipv4.tcp_keepalive_probes=10

net.ipv6.conf.all.disable_ipv6=1

net.ipv6.conf.default.disable_ipv6=1

net.ipv6.conf.lo.disable_ipv6=1

net.ipv4.neigh.default.gc_stale_time=120

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.ipv4.conf.default.arp_announce=2

net.ipv4.conf.lo.arp_announce=2

net.ipv4.conf.all.arp_announce=2

net.ipv4.ip_local_port_range= 45001 65000

net.ipv4.ip_forward=1

net.ipv4.tcp_max_tw_buckets=6000

net.ipv4.tcp_syncookies=1

net.ipv4.tcp_synack_retries=2

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.netfilter.nf_conntrack_max=2310720

net.ipv6.neigh.default.gc_thresh1=8192

net.ipv6.neigh.default.gc_thresh2=32768

net.ipv6.neigh.default.gc_thresh3=65536

net.core.netdev_max_backlog=16384

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.ipv4.tcp_max_syn_backlog = 8096

net.core.somaxconn = 32768

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=524288

fs.file-max=52706963

fs.nr_open=52706963

kernel.pid_max = 4194303

net.bridge.bridge-nf-call-arptables=1

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

vm.max_map_count = 262144
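Two details are easy to miss with the parameters above: the `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, and edits to /etc/sysctl.conf take effect only after `sysctl -p` or a reboot. The sketch below is a small file-level check, assuming `key=value` lines with optional spaces around `=`. The function name `sysctl_conf_has` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if an active (uncommented) line in a
# sysctl-style file sets the given key to the expected value.
sysctl_conf_has() {
    file=$1
    key=$2
    want=$3
    awk -F= -v k="$key" -v w="$want" '
        /^[ \t]*#/ { next }
        {
            name = $1
            val = $2
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (name == k && val == w) found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "$file"
}

# On a real node (as root) one would typically run:
#   modprobe br_netfilter && sysctl -p /etc/sysctl.conf
#   sysctl_conf_has /etc/sysctl.conf net.ipv4.ip_forward 1 && echo "ip_forward is set"
```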

Configure the package repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

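List the available versions

yum --showduplicates list kubeadm

Note: the mirror is not synchronized through an official mechanism, so the GPG check of the repository index may fail. If it does, install with yum install -y --nogpgcheck kubelet kubeadm kubectl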

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet && systemctl start kubelet

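Configure host resolution

Add the following entries to /etc/hosts on every node. If an internal DNS already resolves these names, skip this step; just make sure that pinging each hostname resolves.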

172.16.209.124 k8s-master-01

172.16.209.125 k8s-master-02

172.16.209.127 k8s-master-03

172.16.209.184 k8s-etcd-01

172.16.209.185 k8s-etcd-02

172.16.209.187 k8s-etcd-03

172.16.209.181 k8s-node-01

172.16.209.182 k8s-node-02

172.16.209.183 k8s-node-03
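When these entries are added by script across nine machines, it helps if the step can be repeated without producing duplicates. The following is a minimal sketch under that assumption; `add_host_entry` is a hypothetical helper name, and it appends a line only when no active line already maps the hostname.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts-style file unless an
# active (uncommented) line already lists that name.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    if ! awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) hit = 1 }
            END { exit (hit ? 0 : 1) }
        ' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage on a real node (as root), with addresses from this article:
#   add_host_entry /etc/hosts 172.16.209.124 k8s-master-01
#   add_host_entry /etc/hosts 172.16.209.184 k8s-etcd-01
```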

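Configure time synchronization

Time synchronization was already set up in the machine image, so it is not configured here. Use your internal time source if you have one; otherwise configure the Alibaba Cloud or Google time servers.

Kubernetes initialization preparation

- On master nodes, install kubeadm, kubelet, kubectl, docker, and cri-dockerd.

- On node and etcd nodes, install kubeadm, kubelet, docker, and cri-dockerd.

- On all nodes, load the ipvs modules (optional; not needed if kube-proxy uses iptables).

- On one master node, generate the kube-vip YAML file and copy it to the other two master nodes.

- On master and node nodes, pull all images; on etcd nodes, pull the etcd image.

- On all nodes, flush the iptables rules.

Install the Kubernetes components

# master nodes

yum -y install --nogpgcheck kubeadm-1.28.2-0 kubectl-1.28.2-0 kubelet-1.28.2-0

# node and etcd nodes

yum -y install --nogpgcheck kubeadm-1.28.2-0 kubelet-1.28.2-0

Install cri-dockerd

# Download

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.7/cri-dockerd-0.3.7.amd64.tgz

# Extract

tar -xzvf cri-dockerd-0.3.7.amd64.tgz -C /usr/local/share/

# Create a symlink

ln -sv /usr/local/share/cri-dockerd/cri-dockerd /usr/local/bin/

# Create the service file (overwrites any existing file so that a rerun does not duplicate the content)

cat > /usr/lib/systemd/system/cri-dockerd.service << EOF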

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9 --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --cri-dockerd-root-directory=/var/lib/dockershim --docker-endpoint=unix:///var/run/docker.sock --cri-dockerd-root-directory=/var/lib/docker

ExecReload=/bin/kill -s HUP \$MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

# Create the socket file

cat > /usr/lib/systemd/system/cri-dockerd.socket << EOF

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-dockerd.service

[Socket]

ListenStream=/var/run/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

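# Reload systemd, then enable the units at boot and start them

systemctl daemon-reload

systemctl enable --now cri-dockerd cri-dockerd.socket

Install the ipvs modules (optional)

# Load the kernel modules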

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

yum install -y ipset ipvsadm

# Verify that the modules are loaded

lsmod | grep -e ip_vs -e nf_conntrack
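Modules loaded with `modprobe` do not survive a reboot. On systemd-based systems, `systemd-modules-load` reads files under /etc/modules-load.d/ that list one module name per line. The sketch below writes such a file for the modules loaded above; the function name `write_ipvs_modules_conf` and the file name `ipvs.conf` are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical helper: write the IPVS-related module names, one per line,
# to a modules-load.d style file.
write_ipvs_modules_conf() {
    out=$1
    printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack > "$out"
}

# Usage on a real node (as root); the file name is an assumption:
#   write_ipvs_modules_conf /etc/modules-load.d/ipvs.conf
```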

Generate the kube-vip.yaml file

# Pull the image

docker pull docker.io/plndr/kube-vip:v0.3.8

# Create the directory

mkdir -p /etc/kubernetes/manifests/

# Generate the kube-vip.yaml file

docker run --rm --net=host docker.io/plndr/kube-vip:v0.6.2 manifest pod \

--interface eth0 \

--vip 172.16.209.190 \

--controlplane \

--services \

--arp \

--enableLoadBalancer \

--leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml

Output

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  name: kube-vip

  namespace: kube-system

spec:

  containers:

  - args:

    - manager

    env:

    - name: vip_arp

      value: "true"

    - name: vip_interface

      value: eth0

    - name: port

      value: "6443"

    - name: vip_cidr

      value: "32"

    - name: cp_enable

      value: "true"

    - name: cp_namespace

      value: kube-system

    - name: vip_ddns

      value: "false"

    - name: svc_enable

      value: "true"

    - name: vip_leaderelection

      value: "true"

    - name: vip_leaseduration

      value: "5"

    - name: vip_renewdeadline

      value: "3"

    - name: vip_retryperiod

      value: "1"

    - name: vip_address

      value: 172.16.209.190

    image: ghcr.io/kube-vip/kube-vip:v0.3.8

    imagePullPolicy: Always

    name: kube-vip

    resources: {}

    securityContext:

      capabilities:

        add:

        - NET_ADMIN

        - NET_RAW

        - SYS_TIME

    volumeMounts:

    - mountPath: /etc/kubernetes/admin.conf

      name: kubeconfig

  hostNetwork: true

  volumes:

  - hostPath:

      path: /etc/kubernetes/admin.conf

    name: kubeconfig

status: {}

Copy the kube-vip YAML file to the other two master nodes

# Copy to master-02

scp /etc/kubernetes/manifests/kube-vip.yaml ops@k8s-master-02:

ssh ops@k8s-master-02

sudo -i

mv /home/ops/kube-vip.yaml /etc/kubernetes/manifests/

chown root:root /etc/kubernetes/manifests/kube-vip.yaml

ll /etc/kubernetes/manifests/kube-vip.yaml

# Copy to master-03

scp /etc/kubernetes/manifests/kube-vip.yaml ops@k8s-master-03:

ssh ops@k8s-master-03

sudo -i

mv /home/ops/kube-vip.yaml /etc/kubernetes/manifests/

chown root:root /etc/kubernetes/manifests/kube-vip.yaml

ll /etc/kubernetes/manifests/kube-vip.yaml

Pull images

List the images required by this version

kubeadm config images list --kubernetes-version v1.28.2

The images required by v1.28.2 are:

registry.k8s.io/kube-apiserver:v1.28.2

registry.k8s.io/kube-controller-manager:v1.28.2

registry.k8s.io/kube-scheduler:v1.28.2

registry.k8s.io/kube-proxy:v1.28.2

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.9-0

registry.k8s.io/coredns/coredns:v1.10.1

Pull the images from the Alibaba Cloud registry

# master and node nodes

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.28.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.28.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.28.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.28.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.10.1

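# etcd nodes

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.9-0

Flush the iptables rules

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

External etcd preparation

Perform these steps on the etcd nodes only.

Prerequisite: every host must have a container runtime, docker, kubelet, and kubeadm installed.

If you consult the official documentation, read the English version; some YAML files in the other versions are incorrectly formatted.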

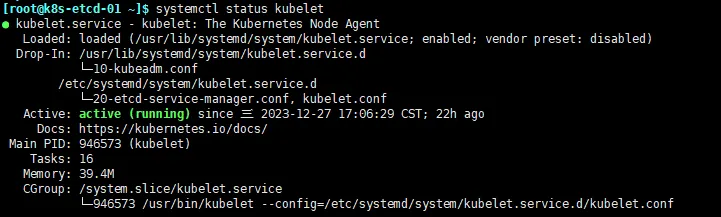

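- kubelet must be running normally on every etcd node before you continue; otherwise the later steps are wasted effort.

- Generate the kubeadm configuration files for all members on one node (by convention the first one, k8s-etcd-01 in this article).

- Generate the certificate authority (on the node that generated the kubeadm configuration files).

- Create certificates for each member and move them to the matching directory on each node (run on the node holding the certificate authority; handle the local node last).

- Extend the etcd certificate lifetime; the default is 1 year.

- Generate the static Pod manifest on each node.

- Check the etcd cluster.

External etcd can be run in two ways. The first, described in the official documentation, generates a Pod manifest that kubelet starts as a static Pod (containerized). The second installs etcd as a service directly on the host. This article uses the first; the second is not covered.

Official documentation: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/setup-ha-etcd-with-kubeadm/

Start kubelet

Because etcd is created first, you must override the kubelet unit file provided by kubeadm by creating a new file with higher precedence.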

cat << EOF > /etc/systemd/system/kubelet.service.d/kubelet.conf

# Replace "systemd" with the cgroup driver of your container runtime. The default value in the kubelet is "cgroupfs".

# Replace the value of "containerRuntimeEndpoint" for a different container runtime if needed.

#

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

authentication:

  anonymous:

    enabled: false

  webhook:

    enabled: false

authorization:

  mode: AlwaysAllow

cgroupDriver: cgroupfs

address: 127.0.0.1

containerRuntimeEndpoint: unix:///var/run/cri-dockerd.sock

staticPodPath: /etc/kubernetes/manifests

EOF

cat << EOF > /etc/systemd/system/kubelet.service.d/20-etcd-service-manager.conf

[Service]

ExecStart=

ExecStart=/usr/bin/kubelet --config=/etc/systemd/system/kubelet.service.d/kubelet.conf

Restart=always

EOF

systemctl daemon-reload

systemctl enable --now kubelet

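A normal start looks as shown in the figure.

Generate the kubeadm configuration files

Put the following content in a shell script and run it, adjusting the IPs and hostnames as needed. When it finishes, /tmp/ contains one directory per IP, each holding the configuration file for that member.

# Update HOST0, HOST1 and HOST2 with the IPs of your hosts

export HOST0=172.16.209.184

export HOST1=172.16.209.185

export HOST2=172.16.209.187

# Update NAME0, NAME1 and NAME2 with the hostnames of your hosts

export NAME0="k8s-etcd-01"

export NAME1="k8s-etcd-02"

export NAME2="k8s-etcd-03"

# Create temporary directories for the files that will be distributed to the other hosts

mkdir -p /tmp/${HOST0}/ /tmp/${HOST1}/ /tmp/${HOST2}/

HOSTS=(${HOST0} ${HOST1} ${HOST2})

NAMES=(${NAME0} ${NAME1} ${NAME2})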

for i in "${!HOSTS[@]}"; do

HOST=${HOSTS[$i]}

NAME=${NAMES[$i]}

cat << EOF > /tmp/${HOST}/kubeadmcfg.yaml

---

apiVersion: "kubeadm.k8s.io/v1beta3"

kind: InitConfiguration

nodeRegistration:

  name: ${NAME}

  criSocket: unix:///var/run/cri-dockerd.sock

localAPIEndpoint:

  advertiseAddress: ${HOST}

---

apiVersion: "kubeadm.k8s.io/v1beta3"

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers

kind: ClusterConfiguration

etcd:

  local:

    serverCertSANs:

    - "${HOST}"

    peerCertSANs:

    - "${HOST}"

    extraArgs:

      initial-cluster: ${NAMES[0]}=https://${HOSTS[0]}:2380,${NAMES[1]}=https://${HOSTS[1]}:2380,${NAMES[2]}=https://${HOSTS[2]}:2380

      initial-cluster-state: new

      name: ${NAME}

      listen-peer-urls: https://${HOST}:2380

      listen-client-urls: https://${HOST}:2379

      advertise-client-urls: https://${HOST}:2379

      initial-advertise-peer-urls: https://${HOST}:2380

EOF

done

Generate the certificate authority

kubeadm init phase certs etcd-ca

On success, two files are created:

- /etc/kubernetes/pki/etcd/ca.crt

- /etc/kubernetes/pki/etcd/ca.key

Create certificates for each member

Create certificates for k8s-etcd-02

kubeadm init phase certs etcd-server --config=/tmp/172.16.209.185/kubeadmcfg.yaml

kubeadm init phase certs etcd-peer --config=/tmp/172.16.209.185/kubeadmcfg.yaml

kubeadm init phase certs etcd-healthcheck-client --config=/tmp/172.16.209.185/kubeadmcfg.yaml

kubeadm init phase certs apiserver-etcd-client --config=/tmp/172.16.209.185/kubeadmcfg.yaml

cp -R /etc/kubernetes/pki /tmp/172.16.209.185/

# Clean up certificates that must not be reused

find /etc/kubernetes/pki -not -name ca.crt -not -name ca.key -type f -delete

Create certificates for k8s-etcd-03

kubeadm init phase certs etcd-server --config=/tmp/172.16.209.187/kubeadmcfg.yaml

kubeadm init phase certs etcd-peer --config=/tmp/172.16.209.187/kubeadmcfg.yaml

kubeadm init phase certs etcd-healthcheck-client --config=/tmp/172.16.209.187/kubeadmcfg.yaml

kubeadm init phase certs apiserver-etcd-client --config=/tmp/172.16.209.187/kubeadmcfg.yaml

cp -R /etc/kubernetes/pki /tmp/172.16.209.187/

# Clean up certificates that must not be reused

find /etc/kubernetes/pki -not -name ca.crt -not -name ca.key -type f -delete

Create certificates for k8s-etcd-01. The certificates for the local node stay in place and are not moved, which is why the local node is handled last.

kubeadm init phase certs etcd-server --config=/tmp/172.16.209.184/kubeadmcfg.yaml

kubeadm init phase certs etcd-peer --config=/tmp/172.16.209.184/kubeadmcfg.yaml

kubeadm init phase certs etcd-healthcheck-client --config=/tmp/172.16.209.184/kubeadmcfg.yaml

kubeadm init phase certs apiserver-etcd-client --config=/tmp/172.16.209.184/kubeadmcfg.yaml
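The same four `kubeadm init phase certs` commands are repeated for every member, differing only in the config path. As a sketch of how that repetition could be factored out, the hypothetical helper `etcd_cert_commands` below only prints the commands for one config file, so the result can be reviewed before anything is executed. It does not copy or clean up certificates; those steps remain as shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the four certificate commands for one etcd member.
# It only prints; pipe the output to "sh" on the CA node to actually run it.
etcd_cert_commands() {
    cfg=$1
    for phase in etcd-server etcd-peer etcd-healthcheck-client apiserver-etcd-client; do
        printf 'kubeadm init phase certs %s --config=%s\n' "$phase" "$cfg"
    done
}

# Example: preview the commands for k8s-etcd-02.
etcd_cert_commands /tmp/172.16.209.185/kubeadmcfg.yaml
```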

Move the certificates to their nodes

Move the k8s-etcd-02 certificates

scp -r /tmp/172.16.209.185/* ops@k8s-etcd-02:

# Log in to the remote node and move the files to the matching directories

ssh ops@k8s-etcd-02

# Run the following commands after logging in

sudo -i

mv /home/ops/kubeadmcfg.yaml /root/

mv /home/ops/pki/ /etc/kubernetes/

chown -R root:root /etc/kubernetes/pki

Move the k8s-etcd-03 certificates

scp -r /tmp/172.16.209.187/* ops@k8s-etcd-03:

# Log in to the remote node and move the files to the matching directories

ssh ops@k8s-etcd-03

# Run the following commands after logging in

sudo -i

mv /home/ops/kubeadmcfg.yaml /root/

mv /home/ops/pki/ /etc/kubernetes/

chown -R root:root /etc/kubernetes/pki

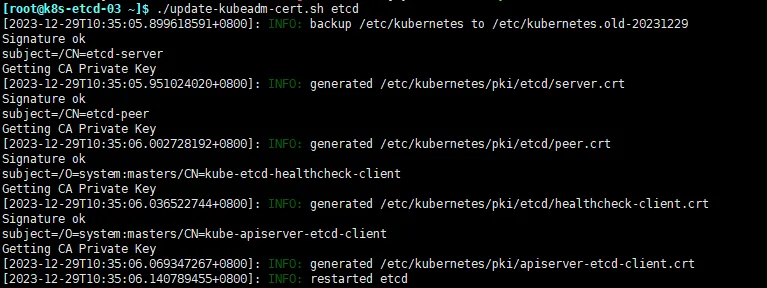

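Add the update-kubeadm-cert.sh script and change the etcd certificate expiry

KUBE_PATH: path of the Kubernetes directory

CAER_DAYS: certificate validity in days; the default is 36500

Make the script executable

chmod a+x update-kubeadm-cert.sh

Run the script

./update-kubeadm-cert.sh etcd

A successful run looks like this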

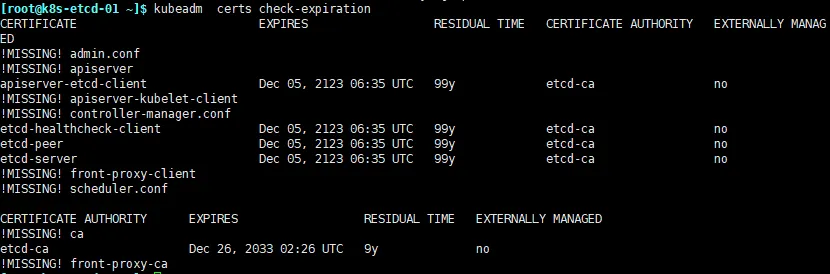

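Check the etcd certificate expiry on all three nodes

kubeadm certs check-expiration

Remove ca.key from the nodes that do not hold the certificate authority

Run the following command on every etcd node other than the one holding the certificate authority, so that the CA key is deleted from those nodes. In this article the certificate authority was generated on etcd-01, so delete the key on etcd-02 and etcd-03.

find /etc/kubernetes/pki/ -name ca.key -type f -delete

Finally, make sure the directory layout on each node matches the following.

k8s-etcd-01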

/tmp/172.16.209.184

└── kubeadmcfg.yaml

---

/etc/kubernetes/pki

├── apiserver-etcd-client.crt

├── apiserver-etcd-client.key

└── etcd

    ├── ca.crt

    ├── ca.key

    ├── healthcheck-client.crt

    ├── healthcheck-client.key

    ├── peer.crt

    ├── peer.key

    ├── server.crt

    └── server.key

k8s-etcd-02

/root

└── kubeadmcfg.yaml

---

/etc/kubernetes/pki

├── apiserver-etcd-client.crt

├── apiserver-etcd-client.key

└── etcd

    ├── ca.crt

    ├── healthcheck-client.crt

    ├── healthcheck-client.key

    ├── peer.crt

    ├── peer.key

    ├── server.crt

    └── server.key

k8s-etcd-03

/root

└── kubeadmcfg.yaml

---

/etc/kubernetes/pki

├── apiserver-etcd-client.crt

├── apiserver-etcd-client.key

└── etcd

    ├── ca.crt

    ├── healthcheck-client.crt

    ├── healthcheck-client.key

    ├── peer.crt

    ├── peer.key

    ├── server.crt

    └── server.key

创建静态 Pod 清单

k8s-etcd-01

kubeadm init phase etcd local --config=/tmp/172.16.209.184/kubeadmcfg.yaml

k8s-etcd-02

kubeadm init phase etcd local --config=/root/kubeadmcfg.yaml

k8s-etcd-03

kubeadm init phase etcd local --config=/root/kubeadmcfg.yaml

重启kubelet,并检查etcd集群运行状况

systemctl restart kubelet

在每台节点上执行

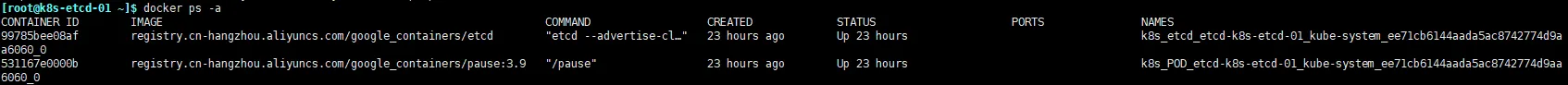

docker ps -a 都能看到如图两个容器

检查etcd集群状态

# 查看集群的所有节点和状态

ETCDCTL_API=3 etcdctl --cert /etc/kubernetes/pki/etcd/peer.crt --key /etc/kubernetes/pki/etcd/peer.key --cacert /etc/kubernetes/pki/etcd/ca.crt --endpoints https://172.16.209.184:2379 member list -w table

# Show the status of each endpoint

ETCDCTL_API=3 etcdctl --cert /etc/kubernetes/pki/etcd/peer.crt --key /etc/kubernetes/pki/etcd/peer.key --cacert /etc/kubernetes/pki/etcd/ca.crt --endpoints https://172.16.209.187:2379,https://172.16.209.185:2379,https://172.16.209.184:2379 endpoint status -w table
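The `--endpoints` value is a comma-separated list of client URLs on port 2379, and it is easy to mistype when written by hand. The sketch below builds it from a list of IPs; `etcd_endpoints` is a hypothetical helper name. The usage comment pairs it with `endpoint health`, a standard etcdctl v3 subcommand, as one more check alongside the two commands above.

```shell
#!/bin/sh
# Hypothetical helper: turn a list of IPs into the comma-separated
# --endpoints value used by etcdctl (client port 2379).
etcd_endpoints() {
    result=""
    for ip in "$@"; do
        result="${result:+$result,}https://$ip:2379"
    done
    printf '%s\n' "$result"
}

# Usage on an etcd node, with the certificate paths used in this article:
#   ETCDCTL_API=3 etcdctl \
#     --cert /etc/kubernetes/pki/etcd/peer.crt \
#     --key /etc/kubernetes/pki/etcd/peer.key \
#     --cacert /etc/kubernetes/pki/etcd/ca.crt \
#     --endpoints "$(etcd_endpoints 172.16.209.184 172.16.209.185 172.16.209.187)" \
#     endpoint health
```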

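Copy the etcd certificate files to the control plane node

Copy the certificate files from any one etcd node to the node that will run the initialization. Here they are copied from etcd-01 to master-01.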

scp /etc/kubernetes/pki/etcd/ca.crt ops@k8s-master-01:

scp /etc/kubernetes/pki/apiserver-etcd-client.crt ops@k8s-master-01:

scp /etc/kubernetes/pki/apiserver-etcd-client.key ops@k8s-master-01:

ssh ops@k8s-master-01

sudo -i

mkdir -p /etc/kubernetes/pki/etcd

mv /home/ops/apiserver-etcd-client.* /etc/kubernetes/pki/

mv /home/ops/ca.crt /etc/kubernetes/pki/etcd

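chown root:root /etc/kubernetes/pki/apiserver-etcd-client.*

chown root:root /etc/kubernetes/pki/etcd/ca.crt

Initialize the control plane node

Run this step on one of the master nodes (master-01 in this article). Adjust the configuration file to your environment; removing the comments before use is recommended to avoid YAML formatting errors.

Create the configuration file kubeadm-init-config.yaml with the following content: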

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 172.16.209.124 # address of this host

  bindPort: 6443 # API port

nodeRegistration:

  criSocket: unix:///var/run/cri-dockerd.sock # CRI socket

  imagePullPolicy: IfNotPresent # image pull policy

  name: k8s-master-01 # hostname of this host

  taints: null

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs # use ipvs mode

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki # certificate directory

clusterName: kubernetes # cluster name

controlPlaneEndpoint: 172.16.209.190:6443 # cluster API address and port (the VIP)

controllerManager: {}

dns: {}

etcd: # etcd settings

  external: # external etcd

    endpoints: # external etcd endpoints

    - https://172.16.209.184:2379

    - https://172.16.209.185:2379

    - https://172.16.209.187:2379

    caFile: /etc/kubernetes/pki/etcd/ca.crt # CA file

    certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt # etcd client certificate

    keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key # etcd client key

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # default image registry

kind: ClusterConfiguration

kubernetesVersion: 1.28.2 # version

networking:

  dnsDomain: ydzf.local # cluster-internal DNS domain

  podSubnet: 172.10.0.0/16 # Pod IP range

  serviceSubnet: 192.168.1.0/20 # Service IP range

scheduler: {}
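One detail of the `networking` values is worth checking by hand: `192.168.1.0` is not the base address of a /20 network, so the range this prefix actually covers starts at 192.168.0.0 and ends at 192.168.15.255. The sketch below computes the network address of an IPv4 CIDR with plain shell arithmetic, which makes the effective range visible and helps confirm that it does not overlap the node or Pod networks. The function name `cidr_network` is hypothetical, and nothing here claims how kubeadm itself treats the value as written.

```shell
#!/bin/sh
# Hypothetical helper: print the network address of an IPv4 CIDR.
cidr_network() {
    addr=${1%/*}
    bits=${1#*/}
    old_ifs=$IFS
    IFS=.
    set -- $addr          # split the dotted quad into $1..$4
    IFS=$old_ifs
    ip=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    if [ "$bits" -eq 0 ]; then
        mask=0
    else
        mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
    fi
    net=$(( $ip & $mask ))
    printf '%d.%d.%d.%d/%s\n' \
        $(( ($net >> 24) & 255 )) $(( ($net >> 16) & 255 )) \
        $(( ($net >> 8) & 255 )) $(( $net & 255 )) "$bits"
}

cidr_network 192.168.1.0/20   # prints 192.168.0.0/20
cidr_network 172.10.0.0/16    # prints 172.10.0.0/16
```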

Initialization command

kubeadm init --config=kubeadm-init-config.yaml --upload-certs

A successful run prints the following

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 172.16.209.190:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6bf0b46b12c8e6dd2e01f9805cf5c4bd9b226f3f9faea7e9b346044baa71b542 \

--control-plane --certificate-key 50d07e65f9597eb4d6a437a68e8045282dce6d7831b18356014711501f90b9d6

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.209.190:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6bf0b46b12c8e6dd2e01f9805cf5c4bd9b226f3f9faea7e9b346044baa71b542

Set up kubectl access and shell completion

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

yum -y install bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> /root/.bashrc

查看proxy模式

kubectl get configmap kube-proxy -n kube-system -o yaml | grep mode

![]() 查看kube-vip是否启动成功

查看kube-vip是否启动成功

kubectl get pod -A|grep "kube-vip-k8s"

![]() 查看master是否有vip

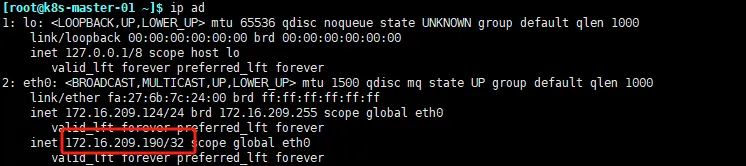

查看master是否有vip

ip ad

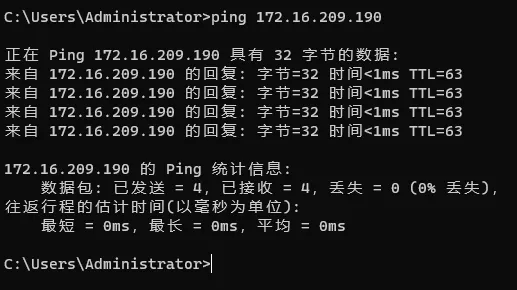

测试vip是否可用

测试vip是否可用

添加master节点

在另外两个master执行如下join命令,命令来源于初始化成功的输出内容,请使用当时的token

kubeadm join 172.16.209.190:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6bf0b46b12c8e6dd2e01f9805cf5c4bd9b226f3f9faea7e9b346044baa71b542 \

--control-plane --certificate-key 50d07e65f9597eb4d6a437a68e8045282dce6d7831b18356014711501f90b9d6 --cri-socket=unix:///var/run/cri-dockerd.sock

A successful run prints the following

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

Set up kubectl access and shell completion

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

yum -y install bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> /root/.bashrc

Label the master nodes

kubectl label node k8s-master-01 node-role.kubernetes.io/master=

kubectl label node k8s-master-02 node-role.kubernetes.io/master=

kubectl label node k8s-master-03 node-role.kubernetes.io/master=

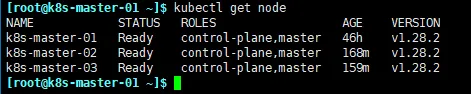

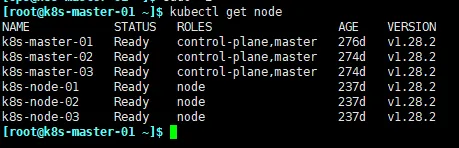

查看节点

kubectl get node

修改master节点证书过期时间

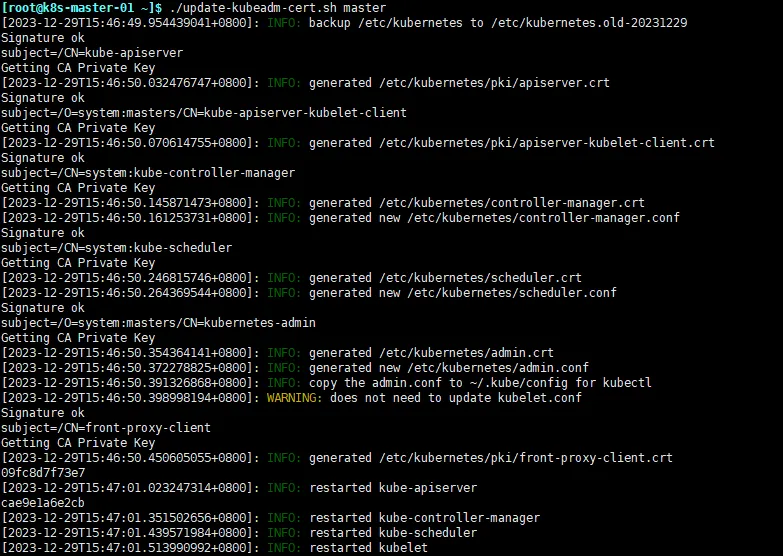

在所有master节点操作 参照修改etcd证书过期时间步骤,脚本一样,执行时把etcd改为master即可 执行命令如下

./update-kubeadm-cert.sh master

成功结果如下图

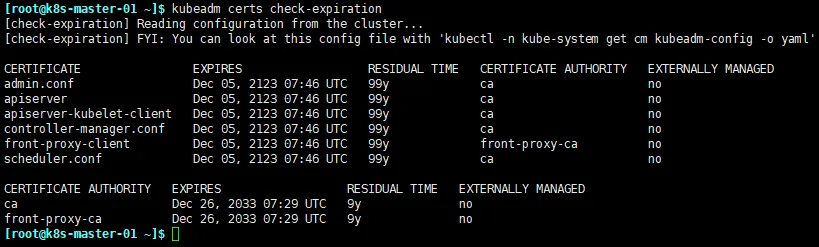

Check the current certificate expiry

kubeadm certs check-expiration

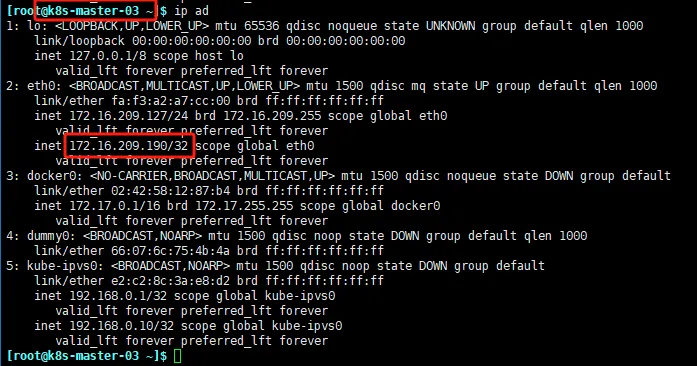

Verify kube-vip

Check the network interfaces on the three masters to find which one holds the VIP

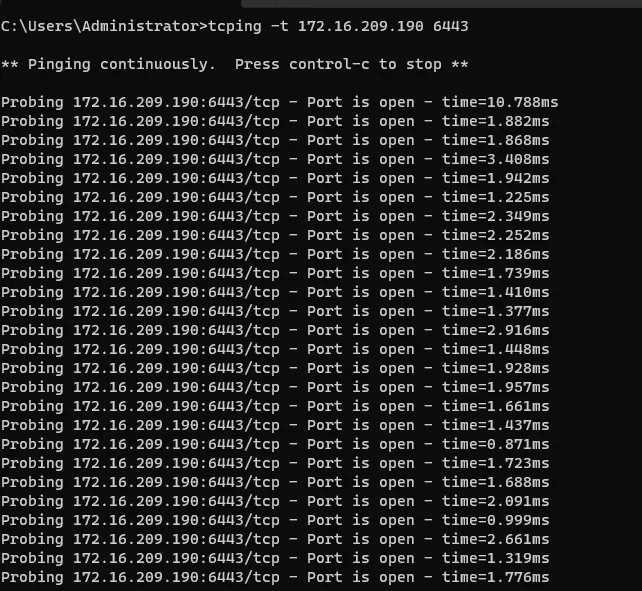

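Run a continuous tcping against the port; the Windows tcping tool is used here

Reboot the node that holds the VIP and watch for packet loss or timeouts, whether the Pods stay healthy, and what the Pod logs show

reboot

Results

- With the continuous ping set to once per second, there is no packet loss and no timeout; the VIP switches over transparently

- The VIP follows the leader among the masters: when the leader changes, the VIP moves with it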

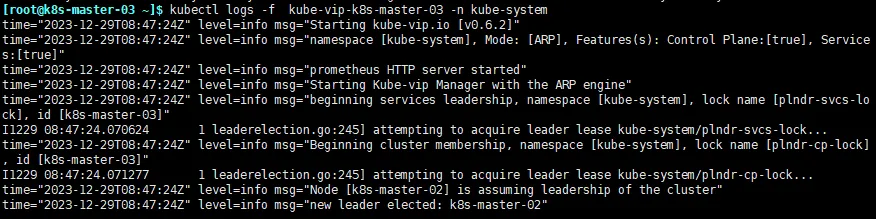

- 重启的节点会在启动成功的时候重新加入集群,并告知当前领导者的更换,日志如下

- 查看其他两个节点的日志,也是一样,会提示新的领导者是 k8s-master-02

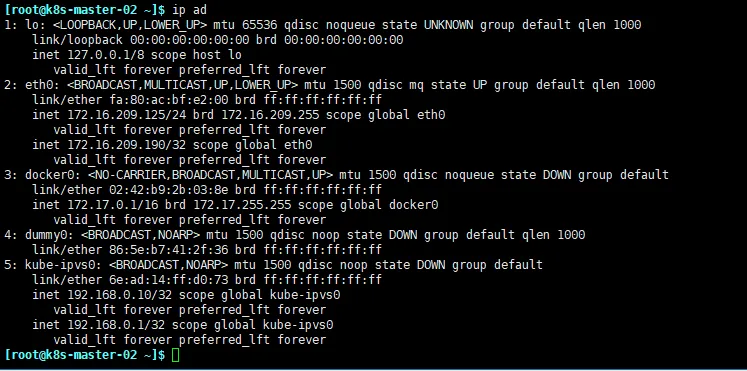

- 查看 k8s-master-02 网卡详情会看到vip已经过来了

启动 cni

cni使用calico 添加 calico yaml文件

启动cni

kubectl apply -f calico.yaml

启动 traefik

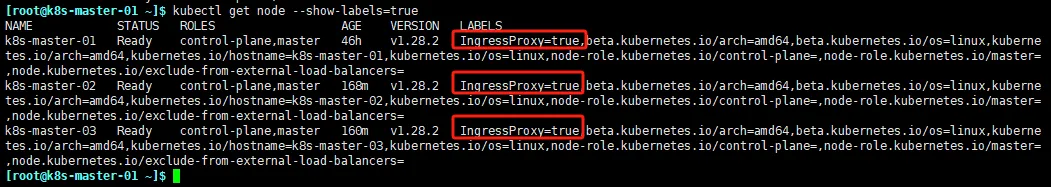

Label the nodes that will run Traefik, usually the master nodes

kubectl label nodes k8s-master-01 IngressProxy=true

kubectl label nodes k8s-master-02 IngressProxy=true

kubectl label nodes k8s-master-03 IngressProxy=true

Verify the labels

kubectl get node --show-labels=true

Add the Traefik YAML files (available for download in the original post)

Start Traefik

kubectl apply -f 00-Resource-Definition.yaml -f 01-rbac.yaml -f 02-traefik-config.yaml -f 03-traefik.yaml -f 04-ingressroute.yaml

Add the worker nodes

The original token has expired, so generate a new one

kubeadm token create

The command returns a string, which is the token

uxooxk.eipu6gji0f61cqwr

Compute the discovery-token-ca-cert-hash

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

The resulting string is the SHA-256 hash to use for discovery-token-ca-cert-hash

6bf0b46b12c8e6dd2e01f9805cf5c4bd9b226f3f9faea7e9b346044baa71b542

Putting the pieces together, the join command is

kubeadm join 172.16.209.190:6443 --token uxooxk.eipu6gji0f61cqwr --discovery-token-ca-cert-hash sha256:6bf0b46b12c8e6dd2e01f9805cf5c4bd9b226f3f9faea7e9b346044baa71b542 --cri-socket=unix:///var/run/cri-dockerd.sock
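The join command is just four values concatenated: the API endpoint (the VIP), the token, the CA certificate hash, and the CRI socket. A minimal sketch of that assembly follows; `build_join_command` is a hypothetical helper name. As an alternative to assembling the pieces by hand, `kubeadm token create --print-join-command` prints a ready-made join command with a fresh token and the hash, to which `--cri-socket` still has to be appended in this setup.

```shell
#!/bin/sh
# Hypothetical helper: assemble a worker join command from its parts.
build_join_command() {
    endpoint=$1
    token=$2
    ca_hash=$3
    cri_socket=$4
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s --cri-socket=%s\n' \
        "$endpoint" "$token" "$ca_hash" "$cri_socket"
}

# Example with the values shown in this article:
build_join_command 172.16.209.190:6443 uxooxk.eipu6gji0f61cqwr \
    6bf0b46b12c8e6dd2e01f9805cf5c4bd9b226f3f9faea7e9b346044baa71b542 \
    unix:///var/run/cri-dockerd.sock
```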

Run the join command on the three worker nodes; the result is as follows

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Label the worker nodes

kubectl label node k8s-node-01 node-role.kubernetes.io/node=

kubectl label node k8s-node-02 node-role.kubernetes.io/node=

kubectl label node k8s-node-03 node-role.kubernetes.io/node=

After the worker nodes finish initializing, list the nodes

Remove all exited containers

docker ps -a|grep Exited|awk '{print $1}'|xargs docker rm

This completes the Kubernetes cluster deployment.